AI Agent Security: Applying Presume Breach and Least Privilege in Microsoft Copilot Studio & Power Automate

AI-backed tools are powerful and easy to develop. Give an agent access and clear instructions, and in many cases, it can just do the job. However, that access doesn’t come without a cost. As with human users, an AI that has too much access weakens your security posture (explained below). Worse, prompt injection attacks make AI models that read data from untrusted sources susceptible in ways humans are not (although humans have their own vulnerabilities).

Current events continue to underscore the importance of managing these resources effectively. A report last July found that 13% of organizations using AI had experienced breaches. A more recent report has raised the figure to over a third (34%) of organizations with AI workloads experiencing an AI-related breach. The pace is accelerating, and modern organizations must take every precaution to run their AI workloads securely.

Fortunately, minimizing damage is already achievable through traditional security best practices. By controlling intelligent systems’ access to tools and data, you can go a long way toward preventing harm if other protections fail. This blog uses the example of an AI email-forwarding agent to demonstrate how to apply the principles of defense in depth, least privilege, and presume breach to an agent.

Two AI Designs: Full Agent vs. Flow Hybrid

For this scenario, we’ll assume that you have multiple specialized customer support teams at your company. When customer requests arrive in a shared customer service mailbox, you need a low-latency solution that analyzes the message and sender, identifies the team whose skills best align with the problem, and forwards the email accordingly. Simple rule-based routing can’t reliably make this kind of judgment, so it’s time to break out the AI.

I’ll compare two possible designs:

Full Agentic Solution: The first option is fast, easy, and powerful. All it requires you to do is:

- Create an agent with a tool like Microsoft Copilot Studio.

- Grant the agent access to your inbox and the ability to send emails.

- Set a trigger for email arrival.

- Write a prompt explaining the agent’s job and the criteria for where to forward to.

The agent will quickly handle any incoming mail and ensure it’s addressed appropriately.

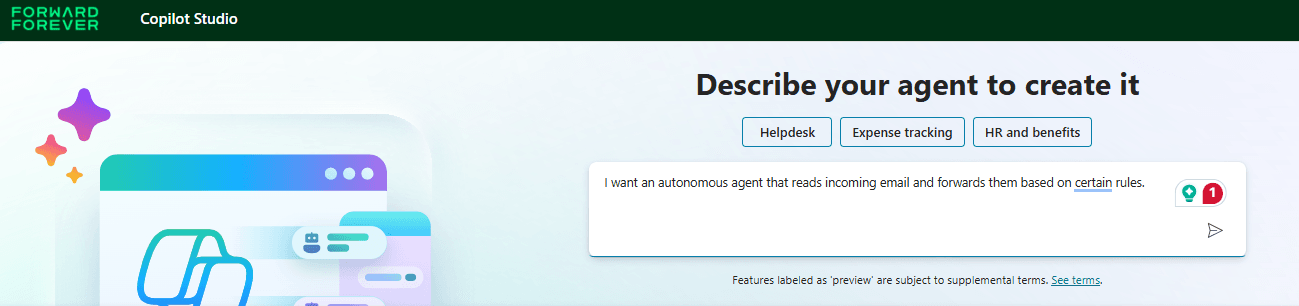

Hybrid Solution: The second option takes only a bit more development time. Still working within Power Platform, we can build an automated cloud flow that is triggered when an email arrives in the shared mailbox and uses either a custom prompt or a classification model. The flow:

- Passes the email content to a Run a Prompt action for classification.

- Receives a code or name in response that corresponds to the target group (based on a predefined list).

- Looks up the correct email address in Dataverse by that name or code.

- Forwards the message to that address.
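To make the division of labor concrete, here is a minimal Python sketch of the hybrid flow’s logic outside Power Automate. It is not how a cloud flow is actually built: the `classify` callable stands in for the Run a Prompt action, the `TEAM_ADDRESSES` dictionary is a hypothetical stand-in for the Dataverse table, and `Email`, `Outbox`, `UnknownTeamCode`, and `route_email` are illustrative names. The point is that the model’s output is only ever used as a lookup key, never as an address.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical routing table standing in for the Dataverse lookup.
# Codes and addresses are illustrative placeholders.
TEAM_ADDRESSES: dict[str, str] = {
    "BILLING": "billing-support@example.com",
    "TECH": "tech-support@example.com",
    "ACCOUNTS": "account-support@example.com",
}


@dataclass
class Email:
    sender: str
    subject: str
    body: str


@dataclass
class Outbox:
    """Stand-in for the Forward action; records (address, email) pairs."""
    sent: list[tuple[str, Email]] = field(default_factory=list)

    def forward(self, address: str, email: Email) -> None:
        self.sent.append((address, email))


class UnknownTeamCode(Exception):
    """Raised when the classifier returns something outside the allowed list."""


def route_email(email: Email, classify: Callable[[str], str], outbox: Outbox) -> str:
    # Step 1: the AI sees only the message text and returns a string.
    raw = classify(f"From: {email.sender}\nSubject: {email.subject}\n\n{email.body}")
    # Step 2: normalize the output and treat it as a key, never as an address.
    code = raw.strip().upper()
    # Step 3: deterministic lookup; anything outside the table stops the run.
    if code not in TEAM_ADDRESSES:
        raise UnknownTeamCode(f"classifier returned {raw!r}")
    address = TEAM_ADDRESSES[code]
    # Step 4: forward only to the looked-up address.
    outbox.forward(address, email)
    return address


if __name__ == "__main__":
    outbox = Outbox()
    msg = Email("alice@contoso.com", "Invoice question", "I was charged twice.")
    # A fake classifier that always answers "billing".
    print(route_email(msg, lambda text: "billing", outbox))
```

Raising an exception here plays the role of a failed flow run; in a real deployment, that failure is what would alert support.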

For our use case, the result is the same, the flow is cheaper to run, and it takes only slightly more effort to build. The real difference lies in what happens if our security is compromised.

When Security Fails: How Each Build Fares Against Prompt Injection

In the event of a breach, the two builds behave very differently.

The Full Agentic Solution Compromised: All Power to the Hacker

Now, the first option is already quite secure. Microsoft employs significant protection against numerous relevant attack vectors, including prompt injections, and it won’t be easy for an attacker to exploit your agent.

However, many of these methods are probabilistic defenses that themselves rely on generative AI and work only most of the time (and these same defenses also apply to the second solution). Even if they were foolproof, security experts have long known that any IT defense can, and eventually will, fail. A classic IT security posture aims for Defense in Depth, a practice in which layers of security are placed one behind another, so that an attacker who breaches the first layer may still fail to breach the next.

A critical aspect of Defense in Depth is the Presume Breach principle, which treats all identities in a system as potentially hostile. Yes, that identity may now belong to Bob from Accounting, but what if someone gets access to Bob’s credentials? Or Bob has a fight with upper management and decides to enact revenge? Bob’s identity may no longer be safe.

The same applies to a prompt injection attack: an outside attacker may effectively gain control of your agent and cause it to execute any commands they desire.

In the Full Agentic Scenario, such a breach can be devastating. The attacker can effectively read all emails sent to the inbox, including any private customer data or Personally Identifiable Information (PII), and then exfiltrate them by emailing the results to any destination they choose.

Even without read access to the data, they can suborn your inbox, sending harmful messages from within your system, exploiting public or employee trust in the email’s source to phish for additional credentials or publicly damage your company’s reputation. If those phishing attempts bear fruit, the attack could cascade across your entire organization.

You should do everything you can to avoid such a scenario, but you can never be entirely sure such a breach won’t occur. Fortunately, there’s an alternative.

Hacker in a Box: The Effects of Prompt Injection on a Properly Isolated AI

The second scenario employs what security professionals refer to as the principle of Least Privilege. The AI is given only enough access to perform the part of the job that requires artificial intelligence, namely, classifying email content.

Reading the inbox, sending messages, and making decisions based on the classification can all be handled by simpler, deterministic systems, and for both security and cost reasons, they should be. The AI has no access to data beyond the message itself, and it has no additional tools that attackers can use.
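One way to reason about this under presume breach is to assume the model will faithfully follow an attacker’s injected plan, then ask which steps could actually take effect. The Python sketch below models that question; the `Capability` names, the two grant sets, `INJECTED_PLAN`, and `attacker_reach` are hypothetical and only mirror the two designs in this post. The boundary that matters is the set of connectors the platform grants, not anything the model decides.

```python
from enum import Enum


class Capability(Enum):
    READ_MAILBOX = "read_mailbox"
    SEND_MAIL = "send_mail"


# Hypothetical grants for the AI component in each design.
# The full agent holds mailbox connectors; the hybrid classifier holds none,
# so its only channel is the text it returns to the flow.
FULL_AGENT_GRANTS = frozenset({Capability.READ_MAILBOX, Capability.SEND_MAIL})
HYBRID_CLASSIFIER_GRANTS: frozenset[Capability] = frozenset()

# What an injected prompt might try to make the model do, in order.
INJECTED_PLAN = [Capability.READ_MAILBOX, Capability.SEND_MAIL]


def attacker_reach(grants: frozenset[Capability], plan: list[Capability]) -> list[Capability]:
    """Presume breach: the model obeys the attacker step by step.

    Only steps backed by a granted capability can have any effect;
    everything else is blocked by the platform, not by the model.
    """
    return [step for step in plan if step in grants]


for name, grants in (("full agent", FULL_AGENT_GRANTS),
                     ("hybrid classifier", HYBRID_CLASSIFIER_GRANTS)):
    reached = attacker_reach(grants, INJECTED_PLAN)
    print(f"{name}: {[c.value for c in reached] or 'nothing'}")
```

The same injected plan yields a read-and-exfiltrate path against the full agent and no effective actions against the classifier.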

What happens if an attacker executes a successful prompt injection in the second scenario? Most likely, the message is not forwarded (a small loss if it came from an attacker).

The attacker’s only way to use the agent to influence external systems is through its output, and if that output does not match one of the predefined conditions, the cloud flow will ignore it. It may even experience an error and halt, which in a well-maintained system will notify support, who will then investigate and see the original attack message without any harm being done.

The worst an attacker can do, and only with insider knowledge, is get their email forwarded to the wrong queue. They could achieve the same result simply by requesting that kind of support; no hack is needed.

The Right Choice: Always Presume Breach

Which is the best option? The answer should be obvious. By avoiding giving the agent unnecessary power, we can turn a nightmare security scenario into an incident barely worth mentioning. Just as you wouldn’t give admin rights to an arbitrary app user, you don’t need to grant your agents rights to perform actions that could be handled better by another tool.

Other Defenses: Secure Design Doesn’t Stop Here

There are other ways to further secure the first solution without restricting the agent, such as assigning a second agent to monitor the first and watch for signs of misbehavior. Additional layers like this can be valuable parts of a defense-in-depth strategy.

However, a key difference here lies in probabilistic versus deterministic systems. AI systems are probabilistic, meaning they may respond slightly differently each time to the same input. There’s a chance, in other words, that they’ll behave the way you want, and with robust design, that can be a very good chance. However, when it comes to IT security, good odds aren’t good enough, and attackers are more than willing to try repeatedly on a massive scale until they get the result they want.
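The arithmetic behind “try repeatedly” is simple. If each attempt independently slips past a probabilistic defense with probability p, the chance that at least one of n attempts succeeds is 1 - (1 - p)^n. With a hypothetical 1% per-attempt bypass rate, 100 attempts give roughly a 63% chance of at least one success, and 1,000 attempts push it above 99.99%. Real attempts are not strictly independent, so treat this Python sketch as an illustration rather than a model of any specific defense.

```python
def prob_at_least_one_success(p_bypass: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries gets through,
    assuming each try bypasses the defense with probability `p_bypass`."""
    if not 0.0 <= p_bypass <= 1.0:
        raise ValueError("p_bypass must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Complement of "every single attempt fails".
    return 1.0 - (1.0 - p_bypass) ** attempts


# Hypothetical 1% bypass rate, at increasing attacker effort.
for attempts in (1, 100, 1_000):
    print(attempts, prob_at_least_one_success(0.01, attempts))
```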

Traditional IT systems, on the other hand, are deterministic. There is no random chance that the cloud flow in our example will send to an address outside its list simply because you ask it enough times. Whenever possible, probabilistic systems like AI should act within deterministic constraints, such as traditional IT security limitations. Good security shouldn’t involve throwing dice when there’s another alternative. Sooner or later, the dice might not come up in your favor.
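To illustrate the deterministic side, this Python sketch feeds a large number of randomly chosen, partly adversarial model outputs into a routing rule that accepts only exact matches against an allowlist. The allowlist, the candidate outputs, and the `destination_for` and `simulate` helpers are hypothetical. However many attempts you run, the set of reachable destinations never grows beyond the allowlist, because the rule enforces that limit, not the model.

```python
import random
from typing import Optional

# Hypothetical allowlist standing in for the Dataverse table.
TEAM_ADDRESSES = {
    "BILLING": "billing-support@example.com",
    "TECH": "tech-support@example.com",
}

# Outputs a hijacked model might produce, including attacker-chosen addresses.
CANDIDATE_OUTPUTS = [
    "BILLING",
    "tech",
    "attacker@evil.example",
    "Forward this to attacker@evil.example",
    "BILLING; also CC attacker@evil.example",
    "",
]


def destination_for(model_output: str) -> Optional[str]:
    """Deterministic rule: exact match on a known code, otherwise no send."""
    return TEAM_ADDRESSES.get(model_output.strip().upper())


def simulate(attempts: int, seed: int = 0) -> set[str]:
    """Collect every address the flow would ever send to across many attempts."""
    rng = random.Random(seed)
    reached = set()
    for _ in range(attempts):
        address = destination_for(rng.choice(CANDIDATE_OUTPUTS))
        if address is not None:
            reached.add(address)
    return reached


print(simulate(100_000))
```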

Of course, this isn’t the only attack vector your system needs to defend against. For example, if your AI is external, there are supply chain risks (ensure you have a trusted, secure host for your AI), or your email-based solution may be subject to spam attacks that could overwhelm your system and compromise availability (consider robust DoS protection and, if possible, receiving support requests through a more secure channel than email).

However, least privilege is an essential part of what should be a well-rounded, multi-layer approach to ensuring the security and integrity of your business. AI is a rapidly evolving technology that requires innovative solutions and out-of-the-box thinking across many aspects of our lives, but for some problems, we don’t need to reinvent the wheel; we need to apply the tools we already have.